Understanding the Differences Between LLM Chains and LLM Agent Executors in LangChain using Tools

LangChain has emerged as a robust framework for building applications powered by large language models (LLMs). Two fundamental concepts within LangChain are LLM Chains and LLM Agent Executors, both of which leverage tools to enhance the capabilities of LLMs. While they may seem similar at first glance, understanding their differences is crucial for developers aiming to harness LangChain's full potential.

In this blog post, we’ll explore the distinctions between LLM Chains and LLM Agent Executors, their operational structures, functionalities, and ideal use cases.

LLM Chains Using Tools

LLM Chains represent a sequence of operations in which an LLM processes input, potentially invokes one or more tools, and generates output based on the results of those tool invocations.

Key Characteristics of LLM Chains

- Sequential Execution: The chain linearly executes actions. Each tool is called in response to the LLM’s output, and the results are fed into the next step of the chain.

- Simpler Logic: The logic for determining which tool to invoke is often predefined or straightforward, relying heavily on the LLM’s output to dictate subsequent actions.

- Single Tool Invocation: Typically involves invoking one tool at a time, using methods like invoke, which allows for direct interaction with tools based on the LLM's output.
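Sequential execution can be pictured as function composition: each step's output becomes the next step's input. LangChain expresses this with the | operator, as in the example that follows. The sketch below imitates the idea in plain Python with a hypothetical Step class; it is an illustration of the composition pattern, not LangChain's actual Runnable implementation.

```python
from typing import Any, Callable


class Step:
    """Hypothetical stand-in for a composable runnable: wraps one function."""

    def __init__(self, func: Callable[[Any], Any]):
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # a | b returns a new step that runs a, then feeds its output to b
        return Step(lambda x: other.func(self.func(x)))

    def invoke(self, value: Any) -> Any:
        return self.func(value)


# Hypothetical steps: an uppercasing "model" stub followed by a word-count "tool"
shout = Step(lambda text: text.upper())
word_count = Step(lambda text: len(text.split()))

chain = shout | word_count
print(chain.invoke("chains run steps in a fixed order"))  # 7
```

The order of the steps is fixed when the chain is built; nothing at runtime decides whether to skip or repeat a step, which is exactly what separates a chain from an agent.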

```python
import os
import json

from langchain_openai import ChatOpenAI
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.output_parsers import StrOutputParser
from langchain_core.tools import Tool
from pygments import highlight, lexers, formatters

# Initialize the ChatOpenAI model (the API key is read from the environment)
llm = ChatOpenAI(model_name="gpt-4", max_tokens=1024, api_key=os.environ.get("OPENAI_API_KEY"))

# Define a simple word count function to use as a tool
def count_words(text: str) -> int:
    return len(text.split())

# Create a tool for counting words
word_count_tool = Tool(
    name="word_count",
    description="Counts the number of words in a given text.",
    func=count_words,
)

# Prompt for translation: instructions in the system message, the text in the human message
translation_prompt = ChatPromptTemplate.from_messages([
    ("system", "Translate the following text to French. The output must be: <translated_text>"),
    ("human", "{text}"),
])

# Prompt for summarization: its only variable, translated_text, is filled by the previous step
summarization_prompt = ChatPromptTemplate.from_messages([
    ("system", "Summarize the following text and calculate the word count. "
               "The output must be: <summarized_text in HTML format> and <word_count> words."),
    ("human", "{translated_text}"),
])

# Bind the word count tool to the LLM (this advertises the tool to the model; it does not run it)
llm_with_tools = llm.bind_tools([word_count_tool])

# Combine all steps into a single sequence
full_chain = (
    translation_prompt
    | llm
    | StrOutputParser()                                      # AIMessage -> plain string
    | (lambda translated: {"translated_text": translated})  # map it to the next prompt's variable
    | summarization_prompt
    | llm_with_tools
)

# Input text to be translated and summarized
input_text = """Artificial intelligence is transforming industries by enabling smarter decision-making.
AI is a powerful tool that can help businesses improve efficiency and productivity.
Machine learning is a subset of AI that focuses on training machines to learn from data
and make predictions. Deep learning is a type of machine learning that uses neural networks
to model complex patterns in large datasets. AI is revolutionizing the way companies operate
and is driving innovation across all sectors."""

# Execute the chain with the input text
result = full_chain.invoke({"text": input_text})
print("Result Type:", type(result))
print("Result Content:", result)

# Convert the result to a serializable dictionary (extract fields from the AIMessage)
result_dict = {
    "content": result.content,
    "additional_kwargs": result.additional_kwargs,
    "response_metadata": result.response_metadata,
}

# Convert the dict to a JSON-formatted string and highlight it for the terminal
json_str = json.dumps(result_dict, indent=4)
colored_json = highlight(json_str, lexers.JsonLexer(), formatters.TerminalFormatter())
print(colored_json)
print("Result Content:", result.content)
```

This example shows the characteristics of an LLM Chain in a simple, linear process. It satisfies Sequential Execution because the steps run one after another in a fixed order: the input text is first translated into French, and the translation is then summarized by a model that has the word count tool bound to it. Each step depends on the output of the previous one, forming a clear linear progression.

Simpler Logic is evident because the order of operations is fixed in code. The first model call translates the input, the translation is passed to the summarization prompt, and the final model call produces the summary, all in a predefined order without any runtime decision about what to do next.

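Single Tool Invocation also applies: only one tool (word_count) is involved, and it is bound only to the final model call. Note, however, that bind_tools only describes the tool to the model; the chain never executes it. If the model decides to call the tool, the request appears in result.tool_calls, and your own code must run it. In the sample run below, finish_reason is "stop" and no tool call was made, so the word count in the output was estimated by the model itself rather than computed by count_words (a whitespace split of that summary yields 73 words, not 63).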
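Because the chain only binds the tool, executing a requested call is up to the caller. Here is a minimal plain-Python sketch of that dispatch step. It assumes each tool call is a dict with name, args, and id keys, which is the shape LangChain uses for AIMessage.tool_calls; the TOOLS registry, run_tool_calls, and the "text" argument name are hypothetical, and a real model's arguments follow whatever schema LangChain generated for the tool.

```python
from typing import Any, Callable, Dict, List


def count_words(text: str) -> int:
    """Same word-count logic as the example's tool."""
    return len(text.split())


# Hypothetical registry mapping tool names to plain Python callables
TOOLS: Dict[str, Callable[..., Any]] = {"word_count": count_words}


def run_tool_calls(tool_calls: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Execute each requested tool call and pair its result with the call id."""
    results = []
    for call in tool_calls:
        func = TOOLS.get(call["name"])
        if func is None:
            output: Any = f"Unknown tool: {call['name']}"
        else:
            output = func(**call["args"])  # keyword arguments taken from the call
        results.append({"tool_call_id": call["id"], "output": output})
    return results


# Hypothetical tool call, as a model might emit it
calls = [{"name": "word_count", "args": {"text": "AI is transforming industries"}, "id": "call_1"}]
print(run_tool_calls(calls))  # [{'tool_call_id': 'call_1', 'output': 4}]
```

In practice, each result would then be sent back to the model so it can write its final answer; an agent executor automates exactly that round trip.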

Here is an example of output:

```
(scalexi_chatbot) Aniss-MacBook-Pro:dev akoubaa$ python 01_llm_chain.py
Result Type: <class 'langchain_core.messages.ai.AIMessage'>
Result Content: content='Artificial Intelligence (AI) is transforming industries by enabling smarter decision-making. It is a powerful tool that can help companies improve their efficiency and productivity. Machine learning, a subset of AI, focuses on training machines to learn from data and make predictions. Deep learning, a type of machine learning, uses neural networks to model complex patterns in large data sets. AI is revolutionizing the way companies operate and is stimulating innovation in all sectors.\n\nWord count: 63 words' additional_kwargs={'refusal': None} response_metadata={'token_usage': {'completion_tokens': 94, 'prompt_tokens': 793, 'total_tokens': 887, 'completion_tokens_details': {'audio_tokens': None, 'reasoning_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': None, 'cached_tokens': 0}}, 'model_name': 'gpt-4-0613', 'system_fingerprint': None, 'finish_reason': 'stop', 'logprobs': None} id='run-2285c239-291a-4492-a049-6ed2beaef747-0' usage_metadata={'input_tokens': 793, 'output_tokens': 94, 'total_tokens': 887, 'input_token_details': {'cache_read': 0}, 'output_token_details': {'reasoning': 0}}
{
    "content": "Artificial Intelligence (AI) is transforming industries by enabling smarter decision-making. It is a powerful tool that can help companies improve their efficiency and productivity. Machine learning, a subset of AI, focuses on training machines to learn from data and make predictions. Deep learning, a type of machine learning, uses neural networks to model complex patterns in large data sets. AI is revolutionizing the way companies operate and is stimulating innovation in all sectors.\n\nWord count: 63 words",
    "additional_kwargs": {
        "refusal": null
    },
    "response_metadata": {
        "token_usage": {
            "completion_tokens": 94,
            "prompt_tokens": 793,
            "total_tokens": 887,
            "completion_tokens_details": {
                "audio_tokens": null,
                "reasoning_tokens": 0
            },
            "prompt_tokens_details": {
                "audio_tokens": null,
                "cached_tokens": 0
            }
        },
        "model_name": "gpt-4-0613",
        "system_fingerprint": null,
        "finish_reason": "stop",
        "logprobs": null
    }
}
Result Content: Artificial Intelligence (AI) is transforming industries by enabling smarter decision-making. It is a powerful tool that can help companies improve their efficiency and productivity. Machine learning, a subset of AI, focuses on training machines to learn from data and make predictions. Deep learning, a type of machine learning, uses neural networks to model complex patterns in large data sets. AI is revolutionizing the way companies operate and is stimulating innovation in all sectors.

Word count: 63 words
```

LLM Agent Executors Using Tools

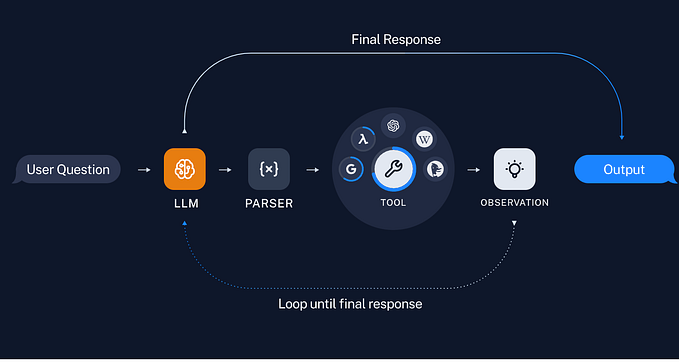

LLM Agent Executors introduce a more complex layer of decision-making and execution. Agents are designed to reason about which actions to take based on input and can decide to invoke multiple tools as needed.

Key Characteristics of LLM Agent Executors

- Dynamic Decision-Making: Agents perform multi-step reasoning, deciding at runtime which tools to invoke and in what order.

- Multiple Tool Management: The agent executor makes several tools available at once. With tool-calling models, a single step can request more than one tool call, and the executor runs all of them before the model reasons again.

- Iterative Control Flow: The executor repeats a reason-act cycle (call the model, run the requested tools, feed the results back) until the model produces a final answer or an iteration limit is reached. This supports conditional and looping behavior that a fixed sequence cannot express.
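The reason-act cycle above can be sketched in a few lines of plain Python. This is a conceptual illustration, not LangChain's AgentExecutor source: toy_policy is a hypothetical rule-based stand-in for the LLM's decision step, the two tools are stubs, and max_iterations mirrors the idea behind the AgentExecutor setting of the same name.

```python
from typing import Any, Callable, Dict, List, Optional

# A decision is either {"final": answer} or {"tool_calls": [(tool_name, tool_input), ...]}
Decision = Dict[str, Any]


def run_agent(
    query: str,
    decide: Callable[[str, List[str]], Decision],
    tools: Dict[str, Callable[[str], str]],
    max_iterations: int = 5,
) -> Optional[str]:
    """Reason-act loop: ask the policy what to do, run tools, repeat."""
    scratchpad: List[str] = []  # observations fed back to the policy on each turn
    for _ in range(max_iterations):
        decision = decide(query, scratchpad)
        if "final" in decision:
            return decision["final"]
        # The policy may request several tools in one step; run each in turn.
        for name, tool_input in decision["tool_calls"]:
            scratchpad.append(f"{name} -> {tools[name](tool_input)}")
    return None  # no final answer within max_iterations


# Hypothetical stubs standing in for the weather and Wikipedia tools
tools = {
    "weather": lambda lat_lon: f"(stub) temperature at {lat_lon}",
    "wiki": lambda q: f"(stub) summary of {q}",
}


def toy_policy(query: str, scratchpad: List[str]) -> Decision:
    """Rule-based stand-in for the LLM: answer once any observation exists."""
    if scratchpad:
        return {"final": "; ".join(scratchpad)}
    if "temperature" in query.lower():
        return {"tool_calls": [("weather", "24.7136,46.6753")]}
    return {"tool_calls": [("wiki", query)]}


print(run_agent("What's the temperature in Riyadh?", toy_policy, tools))
```

The number of model calls is not known in advance: it depends on what the policy decides at each turn, which is the main cost and the main strength of an agent compared with a chain.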

Example of an LLM Agent Executor

Imagine an agent designed to answer weather-related queries and provide Wikipedia summaries:

- Data Gathering: The agent uses two tools: one fetches the current temperature for a pair of geographic coordinates, and the other searches Wikipedia. For example, when asked about the temperature at a specific location, it uses the weather tool to fetch live data from the Open-Meteo API. When asked about a person or topic, it uses the Wikipedia tool instead.

- Reasoning: The agent evaluates the input to decide which tool to invoke. For weather queries, it automatically calls the weather tool with the provided coordinates. For general knowledge queries, it invokes the Wikipedia tool to retrieve and summarize relevant articles.

- Decision Making: The agent can invoke one or several tools in response to user input, depending on what the query requires. For example, when asked about Albert Einstein, it invokes the Wikipedia tool and returns the most relevant summaries.

- Output: The LLM provides the final output after combining the results from the tools invoked. It delivers either weather data or informative Wikipedia summaries based on the input query.

In this scenario, the agent dynamically chooses which tool to use and draws on multiple data sources, demonstrating reasoning and flexibility in decision-making.
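The weather tool does a little more than call the API: Open-Meteo returns a day of hourly readings, and the tool picks the one closest to the current time. That selection step can be isolated as a pure function, which makes it easy to test without network access. This is a sketch; closest_reading and the sample data are hypothetical, and it assumes naive timestamps in the same time zone as the reference time (Open-Meteo documents GMT as its default time zone).

```python
from datetime import datetime
from typing import Sequence


def closest_reading(times: Sequence[str], values: Sequence[float], now: datetime) -> float:
    """Return the value whose ISO-8601 timestamp is closest to `now`.

    Assumes naive timestamps (e.g. "2024-01-01T13:00") in the same time zone as `now`.
    """
    parsed = [datetime.fromisoformat(t) for t in times]
    best = min(range(len(parsed)), key=lambda i: abs(parsed[i] - now))
    return values[best]


# Hypothetical hourly data in the shape of Open-Meteo's "hourly" block
times = ["2024-01-01T12:00", "2024-01-01T13:00", "2024-01-01T14:00"]
temps = [20.5, 21.0, 22.3]
print(closest_reading(times, temps, datetime(2024, 1, 1, 13, 20)))  # 21.0
```

Keeping the selection logic separate from the HTTP call also makes it obvious where a time-zone mismatch would bite: comparing naive and time-zone-aware datetimes raises a TypeError in Python.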

```python
import os
import datetime
import logging

import requests
import wikipedia
from langchain_core.tools import tool
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_openai import ChatOpenAI
from langchain.agents import create_tool_calling_agent, AgentExecutor

# Initialize logger
logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


class WeatherAgentConfig:
    def __init__(self):
        self.base_url = os.getenv('OPENMETEO_BASE_URL', 'https://api.open-meteo.com/v1/forecast')

    def get_api_endpoint(self):
        return self.base_url


class WeatherAgent:
    def __init__(self):
        self.config = WeatherAgentConfig()

    def get_current_temperature(self, latitude: float, longitude: float) -> str:
        """Fetch the current temperature for a given latitude and longitude."""
        logger.info(f"Fetching current temperature for coordinates: {latitude}, {longitude}")
        try:
            params = {
                'latitude': latitude,
                'longitude': longitude,
                'hourly': 'temperature_2m',
                'forecast_days': 1,
            }
            response = requests.get(self.config.get_api_endpoint(), params=params, timeout=10)
            response.raise_for_status()
            results = response.json()

            # Open-Meteo returns GMT timestamps by default, so compare against the current UTC time
            current_utc_time = datetime.datetime.utcnow()
            time_list = [datetime.datetime.fromisoformat(time_str.replace('Z', '+00:00'))
                         for time_str in results['hourly']['time']]
            temperature_list = results['hourly']['temperature_2m']

            # Pick the hourly reading closest to the current time
            closest_time_index = min(range(len(time_list)),
                                     key=lambda i: abs(time_list[i] - current_utc_time))
            current_temperature = temperature_list[closest_time_index]

            logger.info(f"The current temperature is {current_temperature}°C")
            return f'The current temperature is {current_temperature}°C'
        except requests.RequestException as e:
            logger.error(f"Failed to fetch current temperature: {e}")
            return f"Failed to fetch current temperature: {e}"


class WikipediaAgent:
    def __init__(self):
        wikipedia.set_lang("en")

    def search(self, query: str) -> str:
        """Run a Wikipedia search and return summaries of the top three pages."""
        logger.info(f"Searching Wikipedia for: {query}")
        try:
            page_titles = wikipedia.search(query)
            summaries = []
            for page_title in page_titles[:3]:
                try:
                    wiki_page = wikipedia.page(title=page_title, auto_suggest=False)
                    summaries.append(f"Page: {page_title}\nSummary: {wiki_page.summary}")
                except wikipedia.exceptions.DisambiguationError as e:
                    summaries.append(f"Disambiguation for {page_title}: {', '.join(e.options[:5])}")
                except wikipedia.exceptions.PageError:
                    continue
            if not summaries:
                logger.info("No good Wikipedia Search Result was found")
                return "No good Wikipedia Search Result was found"
            logger.info(f"Wikipedia Search Result: {summaries}")
            return "\n\n".join(summaries)
        except Exception as e:
            logger.error(f"An error occurred while searching Wikipedia: {e}")
            return f"An error occurred while searching Wikipedia: {e}"


@tool
def weather_tool(lat_lon: str) -> str:
    """Get the current temperature. Input: 'latitude,longitude', e.g. '24.7136,46.6753'."""
    latitude, longitude = map(float, lat_lon.split(','))
    return WeatherAgent().get_current_temperature(latitude, longitude)


@tool
def wiki_tool(query: str) -> str:
    """Search for articles on Wikipedia and get summaries."""
    return WikipediaAgent().search(query)


def create_agent_executor() -> AgentExecutor:
    prompt_template = ChatPromptTemplate.from_messages([
        ("system", "You are a helpful assistant that can provide weather information or search Wikipedia."),
        ("human", "{input}"),
        # Required by create_tool_calling_agent: holds earlier tool calls and their results
        MessagesPlaceholder("agent_scratchpad"),
    ])
    llm = ChatOpenAI(model_name="gpt-4", max_tokens=1024)
    tools = [weather_tool, wiki_tool]
    agent = create_tool_calling_agent(llm, tools=tools, prompt=prompt_template)
    return AgentExecutor(agent=agent, tools=tools, verbose=True)


def main():
    agent_executor = create_agent_executor()
    queries = [
        "What's the temperature in Riyadh? Use coordinates 24.7136, 46.6753.",
        "Tell me about Albert Einstein.",
    ]
    for query in queries:
        result = agent_executor.invoke({"input": query})
        print(f"Query: {query}")
        print(f"Result: {result['output']}\n")


if __name__ == "__main__":
    main()
```

This LLM Agent differs from an LLM Chain by using dynamic decision-making: it reasons about the query and invokes the appropriate tool based on the user's request instead of following a fixed, sequential process. In an LLM Chain, execution is linear, predefined logic guides each step, and tools are invoked one at a time in a specific order.

In contrast, this agent, created using LangChain, operates more like an autonomous decision-maker. It evaluates the user’s input to decide which tool or function to execute. For example, if the query is about weather, the agent identifies the need for weather data and dynamically invokes the weather_tool to fetch the temperature for the specified coordinates. If the query is about a person or topic, the agent invokes the wiki_tool to search Wikipedia and retrieve relevant summaries.

This approach is flexible because the agent is not locked into a single tool or a fixed sequence of operations. It selects the appropriate tool based on the query context, reasoning about what information is needed and adjusting its behavior accordingly. This ability to reason and adapt to each query at runtime, choosing among multiple tools, is what distinguishes the agent from the more rigid, step-by-step nature of an LLM Chain.
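The agent can only choose well if it knows what each tool does. With tool calling, the model receives each tool's name, description, and argument schema; LangChain's @tool decorator takes these from the function name, docstring, and type hints, which is why the docstrings in the example matter. The sketch below imitates that idea in plain Python with a hypothetical describe_tool helper, producing a spec in the shape of OpenAI's function-tool format. It is simplified (basic types only) and is not LangChain's own converter.

```python
import inspect
from typing import Any, Callable, Dict

# Map basic Python annotations to JSON Schema types; anything else defaults to "string"
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func: Callable[..., Any]) -> Dict[str, Any]:
    """Build an OpenAI-style function-tool spec from a function's signature and docstring."""
    params = inspect.signature(func).parameters
    properties = {
        name: {"type": _JSON_TYPES.get(p.annotation, "string")}
        for name, p in params.items()
    }
    required = [name for name, p in params.items() if p.default is inspect.Parameter.empty]
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": inspect.getdoc(func) or "",
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }


def wiki_tool(query: str) -> str:
    """Search for articles on Wikipedia and get summaries."""
    return f"(stub) results for {query}"  # hypothetical stub, no network call


print(describe_tool(wiki_tool))
```

A vague docstring gives the model little to go on, so precise tool descriptions (including expected input formats) tend to matter as much as the tool code itself.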

Here is sample output from the Agent Executor:

> Entering new AgentExecutor chain...

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

Invoking: `weather_tool` with `{'lat_lon': '24.7136, 46.6753'}`

INFO:__main__:Fetching current temperature for coordinates: 24.7136, 46.6753

INFO:__main__:The current temperature is 36.2°C

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

The current temperature is 36.2°CThe current temperature in Riyadh is 36.2°C.

> Finished chain.

Query: What's the temperature in Riyadh? Use coordinates 24.7136, 46.6753.

Result: The current temperature in Riyadh is 36.2°C.

> Entering new AgentExecutor chain...

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

Invoking: `wiki_tool` with `{'query': 'Albert Einstein'}`

INFO:__main__:Searching Wikipedia for: Albert Einstein

INFO:__main__:Wikipedia Search Result: ['Page: Albert Einstein\nSummary: Albert Einstein ( EYEN-styne; German: [ˈalbɛɐt ˈʔaɪnʃtaɪn] ; 14 March 1879 – 18 April 1955) was a German-born theoretical physicist who is widely held as one of the most influential scientists. Best known for developing the theory of relativity, Einstein also made important contributions to quantum mechanics. His mass–energy equivalence formula E = mc2, which arises from special relativity, has been called "the world\'s most famous equation". He received the 1921 Nobel Prize in Physics "for his services to theoretical physics, and especially for his discovery of the law of the photoelectric effect", a pivotal step in the development of quantum theory.\nBorn in the German Empire, Einstein moved to Switzerland in 1895, forsaking his German citizenship (as a subject of the Kingdom of Württemberg) the following year. In 1897, at the age of seventeen, he enrolled in the mathematics and physics teaching diploma program at the Swiss federal polytechnic school in Zürich, graduating in 1900. In 1901, he acquired Swiss citizenship, which he kept for the rest of his life. In 1903, he secured a permanent position at the Swiss Patent Office in Bern. In 1905, he submitted a successful PhD dissertation to the University of Zurich. In 1914, he moved to Berlin in order to join the Prussian Academy of Sciences and the Humboldt University of Berlin. In 1917, he became director of the Kaiser Wilhelm Institute for Physics; he also became a German citizen again, this time as a subject of the Kingdom of Prussia. In 1933, while Einstein was visiting the United States, Adolf Hitler came to power in Germany. Horrified by the Nazi war of extermination against his fellow Jews, Einstein decided to remain in the US, and was granted American citizenship in 1940. On the eve of World War II, he endorsed a letter to President Franklin D. 
Roosevelt alerting him to the potential German nuclear weapons program and recommended that the US begin similar research. Einstein supported the Allies but generally viewed the idea of nuclear weapons with great dismay.\nEinstein\'s work is also known for its influence on the philosophy of science. In 1905, he published four groundbreaking papers, sometimes described as his annus mirabilis (miracle year). These papers outlined a theory of the photoelectric effect, explained Brownian motion, introduced his special theory of relativity—a theory which addressed the inability of classical mechanics to account satisfactorily for the behavior of the electromagnetic field—and demonstrated that if the special theory is correct, mass and energy are equivalent to each other. In 1915, he proposed a general theory of relativity that extended his system of mechanics to incorporate gravitation. A cosmological paper that he published the following year laid out the implications of general relativity for the modeling of the structure and evolution of the universe as a whole.\nIn the middle part of his career, Einstein made important contributions to statistical mechanics and quantum theory. Especially notable was his work on the quantum physics of radiation, in which light consists of particles, subsequently called photons. With the Indian physicist Satyendra Nath Bose, he laid the groundwork for Bose-Einstein statistics. For much of the last phase of his academic life, Einstein worked on two endeavors that proved ultimately unsuccessful. First, he advocated against quantum theory\'s introduction of fundamental randomness into science\'s picture of the world, objecting that "God does not play dice". Second, he attempted to devise a unified field theory by generalizing his geometric theory of gravitation to include electromagnetism too. As a result, he became increasingly isolated from the mainstream modern physics. 
His intellectual achievements and originality made Einstein broadly synonymous with genius. In 1999, he was named Time\'s Person of the Century. In a 1999 poll of 130 leading physicists worldwide by the British journal Physics World, Einstein was ranked the greatest physicist of all time.', "Page: Einstein family\nSummary: The Einstein family is the family of physicist Albert Einstein (1879–1955). Einstein's great-great-great-great-grandfather, Jakob Weil, was his oldest recorded relative, born in the late 17th century, and the family continues to this day. Albert Einstein's great-great-grandfather, Löb Moses Sontheimer (1745–1831), was also the grandfather of the tenor Heinrich Sontheim (1820–1912) of Stuttgart.\nAlbert's three children were from his relationship with his first wife, Mileva Marić, his daughter Lieserl being born a year before they married. Albert Einstein's second wife was Elsa Einstein, whose mother Fanny Koch was the sister of Albert's mother, and whose father, Rudolf Einstein, was the son of Raphael Einstein, a brother of Albert's paternal grandfather. Albert and Elsa were thus first cousins through their mothers and second cousins through their fathers.", 'Page: Hans Albert Einstein\nSummary: Hans Albert Einstein (May 14, 1904 – July 26, 1973) was a Swiss-American engineer and educator of German and Serbian origin, the second child and first son of physicists Albert Einstein and Mileva Marić. He was a long-time professor of hydraulic engineering at the University of California, Berkeley.\nEinstein was widely recognized for his research on sediment transport. To honor his outstanding achievement in hydraulic engineering, the American Society of Civil Engineers established the "Hans Albert Einstein Award" in 1988 and the annual award is given to those who have made significant contributions to the field.\n\n']

Page: Albert Einstein

Summary: Albert Einstein ( EYEN-styne; German: [ˈalbɛɐt ˈʔaɪnʃtaɪn] ; 14 March 1879 – 18 April 1955) was a German-born theoretical physicist who is widely held as one of the most influential scientists. Best known for developing the theory of relativity, Einstein also made important contributions to quantum mechanics. His mass–energy equivalence formula E = mc2, which arises from special relativity, has been called "the world's most famous equation". He received the 1921 Nobel Prize in Physics "for his services to theoretical physics, and especially for his discovery of the law of the photoelectric effect", a pivotal step in the development of quantum theory.

Born in the German Empire, Einstein moved to Switzerland in 1895, forsaking his German citizenship (as a subject of the Kingdom of Württemberg) the following year. In 1897, at the age of seventeen, he enrolled in the mathematics and physics teaching diploma program at the Swiss federal polytechnic school in Zürich, graduating in 1900. In 1901, he acquired Swiss citizenship, which he kept for the rest of his life. In 1903, he secured a permanent position at the Swiss Patent Office in Bern. In 1905, he submitted a successful PhD dissertation to the University of Zurich. In 1914, he moved to Berlin in order to join the Prussian Academy of Sciences and the Humboldt University of Berlin. In 1917, he became director of the Kaiser Wilhelm Institute for Physics; he also became a German citizen again, this time as a subject of the Kingdom of Prussia. In 1933, while Einstein was visiting the United States, Adolf Hitler came to power in Germany. Horrified by the Nazi war of extermination against his fellow Jews, Einstein decided to remain in the US, and was granted American citizenship in 1940. On the eve of World War II, he endorsed a letter to President Franklin D. Roosevelt alerting him to the potential German nuclear weapons program and recommended that the US begin similar research. Einstein supported the Allies but generally viewed the idea of nuclear weapons with great dismay.

Einstein's work is also known for its influence on the philosophy of science. In 1905, he published four groundbreaking papers, sometimes described as his annus mirabilis (miracle year). These papers outlined a theory of the photoelectric effect, explained Brownian motion, introduced his special theory of relativity—a theory which addressed the inability of classical mechanics to account satisfactorily for the behavior of the electromagnetic field—and demonstrated that if the special theory is correct, mass and energy are equivalent to each other. In 1915, he proposed a general theory of relativity that extended his system of mechanics to incorporate gravitation. A cosmological paper that he published the following year laid out the implications of general relativity for the modeling of the structure and evolution of the universe as a whole.

In the middle part of his career, Einstein made important contributions to statistical mechanics and quantum theory. Especially notable was his work on the quantum physics of radiation, in which light consists of particles, subsequently called photons. With the Indian physicist Satyendra Nath Bose, he laid the groundwork for Bose-Einstein statistics. For much of the last phase of his academic life, Einstein worked on two endeavors that proved ultimately unsuccessful. First, he advocated against quantum theory's introduction of fundamental randomness into science's picture of the world, objecting that "God does not play dice". Second, he attempted to devise a unified field theory by generalizing his geometric theory of gravitation to include electromagnetism too. As a result, he became increasingly isolated from the mainstream modern physics. His intellectual achievements and originality made Einstein broadly synonymous with genius. In 1999, he was named Time's Person of the Century. In a 1999 poll of 130 leading physicists worldwide by the British journal Physics World, Einstein was ranked the greatest physicist of all time.

Page: Einstein family

Summary: The Einstein family is the family of physicist Albert Einstein (1879–1955). Einstein's great-great-great-great-grandfather, Jakob Weil, was his oldest recorded relative, born in the late 17th century, and the family continues to this day. Albert Einstein's great-great-grandfather, Löb Moses Sontheimer (1745–1831), was also the grandfather of the tenor Heinrich Sontheim (1820–1912) of Stuttgart.

Albert's three children were from his relationship with his first wife, Mileva Marić, his daughter Lieserl being born a year before they married. Albert Einstein's second wife was Elsa Einstein, whose mother Fanny Koch was the sister of Albert's mother, and whose father, Rudolf Einstein, was the son of Raphael Einstein, a brother of Albert's paternal grandfather. Albert and Elsa were thus first cousins through their mothers and second cousins through their fathers.

Page: Hans Albert Einstein

Summary: Hans Albert Einstein (May 14, 1904 – July 26, 1973) was a Swiss-American engineer and educator of German and Serbian origin, the second child and first son of physicists Albert Einstein and Mileva Marić. He was a long-time professor of hydraulic engineering at the University of California, Berkeley.

Einstein was widely recognized for his research on sediment transport. To honor his outstanding achievement in hydraulic engineering, the American Society of Civil Engineers established the "Hans Albert Einstein Award" in 1988 and the annual award is given to those who have made significant contributions to the field.

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

Albert Einstein (14 March 1879 – 18 April 1955) was a German-born theoretical physicist who is widely regarded as one of the most influential scientists. He is best known for developing the theory of relativity, and also made important contributions to quantum mechanics. His mass–energy equivalence formula E = mc2, which arises from special relativity, has been called "the world's most famous equation". He received the 1921 Nobel Prize in Physics "for his services to theoretical physics, and especially for his discovery of the law of the photoelectric effect", a pivotal step in the development of quantum theory.

Born in the German Empire, Einstein moved to Switzerland in 1895 and renounced his German citizenship the following year. He enrolled in the mathematics and physics teaching diploma program at the Swiss federal polytechnic school in Zürich at the age of seventeen, and graduated in 1900. In 1901, he acquired Swiss citizenship, which he kept for the rest of his life. In 1903, he secured a permanent position at the Swiss Patent Office in Bern.

In 1914, he moved to Berlin to join the Prussian Academy of Sciences and the Humboldt University of Berlin. He also became a German citizen again, this time as a subject of the Kingdom of Prussia. In 1933, while Einstein was visiting the United States, Adolf Hitler came to power in Germany. Einstein decided to stay in the US, and was granted American citizenship in 1940.

Einstein's work is also known for its influence on the philosophy of science. In 1905, he published four groundbreaking papers, sometimes described as his annus mirabilis (miracle year). These papers outlined a theory of the photoelectric effect, explained Brownian motion, introduced his special theory of relativity, and demonstrated that if the special theory is correct, mass and energy are equivalent to each other.

Einstein had three children from his relationship with his first wife, Mileva Marić. His second wife was Elsa Einstein, who was also his cousin.

His son, Hans Albert Einstein (May 14, 1904 – July 26, 1973), was a Swiss-American engineer and educator. He was a long-time professor of hydraulic engineering at the University of California, Berkeley, and was widely recognized for his research on sediment transport.

Albert Einstein is remembered not only for his significant contributions to science but also for his influence on the philosophy of science. He was named Time's Person of the Century in 1999, and in a poll of 130 leading physicists worldwide by the British journal Physics World, he was ranked the greatest physicist of all time.

> Finished chain.

Query: Tell me about Albert Einstein.

Result: Albert Einstein (14 March 1879 – 18 April 1955) was a German-born theoretical physicist who is widely regarded as one of the most influential scientists. He is best known for developing the theory of relativity, and also made important contributions to quantum mechanics. His mass–energy equivalence formula E = mc2, which arises from special relativity, has been called "the world's most famous equation". He received the 1921 Nobel Prize in Physics "for his services to theoretical physics, and especially for his discovery of the law of the photoelectric effect", a pivotal step in the development of quantum theory.

Born in the German Empire, Einstein moved to Switzerland in 1895 and renounced his German citizenship the following year. He enrolled in the mathematics and physics teaching diploma program at the Swiss federal polytechnic school in Zürich at the age of seventeen, and graduated in 1900. In 1901, he acquired Swiss citizenship, which he kept for the rest of his life. In 1903, he secured a permanent position at the Swiss Patent Office in Bern.

In 1914, he moved to Berlin to join the Prussian Academy of Sciences and the Humboldt University of Berlin. He also became a German citizen again, this time as a subject of the Kingdom of Prussia. In 1933, while Einstein was visiting the United States, Adolf Hitler came to power in Germany. Einstein decided to stay in the US, and was granted American citizenship in 1940.

Einstein's work is also known for its influence on the philosophy of science. In 1905, he published four groundbreaking papers, sometimes described as his annus mirabilis (miracle year). These papers outlined a theory of the photoelectric effect, explained Brownian motion, introduced his special theory of relativity, and demonstrated that if the special theory is correct, mass and energy are equivalent to each other.

Einstein had three children from his relationship with his first wife, Mileva Marić. His second wife was Elsa Einstein, who was also his cousin.

His son, Hans Albert Einstein (May 14, 1904 – July 26, 1973), was a Swiss-American engineer and educator. He was a long-time professor of hydraulic engineering at the University of California, Berkeley, and was widely recognized for his research on sediment transport.

Albert Einstein is remembered not only for his significant contributions to science but also for his influence on the philosophy of science. He was named Time's Person of the Century in 1999, and in a poll of 130 leading physicists worldwide by the British journal Physics World, he was ranked the greatest physicist of all time.

Comparative Table

To summarize the differences, here’s a comparative table highlighting the key distinctions between LLM Chains and LLM Agent Executors:

| Feature | LLM Chains Using Tools | LLM Agent Executors Using Tools |
|---|---|---|
| Execution Style | Sequential | Dynamic/Concurrent |
| Decision-Making | Predefined Logic | Reasoning-Based |
| Tool Invocation | One at a Time | Multiple Simultaneously |
| Complexity | Simpler | More Complex |
| Use Cases | Linear Tasks | Complex, Multi-Step Tasks |
| Flexibility | Less Flexible | Highly Flexible |
| Implementation | Easier | Requires Advanced Setup |

When to Use Which

LLM Chains

Ideal For:

LLM Chains are most effective for tasks that follow a predefined, linear sequence of operations. These tasks are simple in nature, with no need for dynamic decision-making or handling unexpected inputs. The flow is predictable, where each step naturally follows the previous one, making it suitable for tasks where you know exactly what to do in advance.

Advantages:

- Simplicity: Easier to implement and debug due to the straightforward, linear logic.

- Efficiency: Lower computational overhead, because the chain makes a fixed number of LLM and tool calls in a set order instead of looping until the model decides it is done.

Examples:

- Data Preprocessing Pipelines: Where each step (e.g., cleaning, transforming, normalizing) depends on the outcome of the previous one.

- Sequential Data Transformations: Tasks such as translating a document and then summarizing it, where the steps happen in a known, fixed order.
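To make the linear pattern concrete, here is a framework-free sketch of the data preprocessing pipeline described above. Plain Python functions stand in for the LLM and tool steps; `clean`, `transform`, `normalize` and `run_chain` are hypothetical names chosen for illustration, not LangChain APIs. What matters is that the order of steps is fixed before any input arrives. LangChain's expression language expresses the same idea by composing runnables with the `|` operator.

```python
from typing import Callable, List

# A step takes the previous step's output and returns the next step's input.
Step = Callable[[str], str]

def clean(text: str) -> str:
    # Collapse runs of whitespace into single spaces and trim the ends.
    return " ".join(text.split())

def transform(text: str) -> str:
    # Hypothetical transformation: keep only letters, digits and spaces.
    return "".join(ch for ch in text if ch.isalnum() or ch == " ")

def normalize(text: str) -> str:
    # Lowercase so every downstream step sees a consistent form.
    return text.lower()

def run_chain(steps: List[Step], data: str) -> str:
    # Execute each step in order; no step decides what runs next.
    for step in steps:
        data = step(data)
    return data

pipeline = [clean, transform, normalize]
print(run_chain(pipeline, "  Hello,   LangChain  World! "))
# prints: hello langchain world
```

Swapping in an LLM call for any of these steps does not change the control flow: the chain still runs the same steps in the same order for every input.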

LLM Agent Executors

Ideal For:

LLM Agent Executors shine in scenarios that require flexibility, reasoning, and dynamic decision-making. They are best suited for complex tasks where the sequence of operations is not predefined, and the agent needs to decide in real time which tools to invoke based on the user’s input. They excel at handling multi-step reasoning, integrating various data sources, and adapting to changing conditions.
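The reasoning loop behind an agent executor can be sketched without any framework. In this sketch, a hypothetical rule-based `decide` function stands in for the LLM: at each step it either picks a tool or returns a final answer, and the loop feeds each tool's observation back into the next decision. The `max_iterations` cap mirrors the guard of the same name on LangChain's `AgentExecutor`; the tools, the decision rules and `run_agent` itself are illustrative assumptions, not LangChain code.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools: each takes a string and returns a string observation.
TOOLS: Dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
    "reverse": lambda text: text[::-1],
}

# A decision is either ("tool", tool_name, tool_input) or ("finish", answer, "").
Decision = Tuple[str, str, str]

def decide(query: str, observations: List[Tuple[str, str]]) -> Decision:
    # Stand-in for the LLM's reasoning: choose the next action from the
    # query and from whatever has been observed so far.
    used = {name for name, _ in observations}
    if "count" in query and "word_count" not in used:
        return ("tool", "word_count", query)
    if "reverse" in query and "reverse" not in used:
        return ("tool", "reverse", query)
    summary = "; ".join(f"{name}={result}" for name, result in observations)
    return ("finish", summary or "no tool needed", "")

def run_agent(query: str, max_iterations: int = 5) -> str:
    observations: List[Tuple[str, str]] = []
    for _ in range(max_iterations):
        kind, value, tool_input = decide(query, observations)
        if kind == "finish":
            return value
        # Run the chosen tool and record its output for the next decision.
        observations.append((value, TOOLS[value](tool_input)))
    return "stopped: max_iterations reached"

print(run_agent("count and reverse"))
# prints: word_count=3; reverse=esrever dna tnuoc
```

Depending on its content, the same loop makes zero, one, or several tool calls for a query, which is exactly the behavior a fixed chain cannot provide.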

Advantages:

- Dynamic Decision-Making: Capable of reasoning about the input and determining the most appropriate tools to use.

- Flexibility: Able to handle unexpected inputs, rerun tools as needed, and manage concurrent tasks efficiently.

- Scalability: Efficient for complex workflows where tasks might require interaction between multiple tools and sources of information.

Examples:

- Complex Query Answering Systems: Where the agent must fetch information from various sources (like weather data and Wikipedia) and reason about how to combine the results to provide a comprehensive answer.

- Autonomous Task Automation: Agents that can manage complex processes, such as itinerary planning, where multiple sources of information must be considered and tasks adjusted dynamically.
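For a query that needs several sources, as in the weather-plus-Wikipedia example, the tool calls an agent selects for a single step are often independent of one another, so they can run concurrently before their results are combined. The sketch below assumes the plan (which tools to call, with which inputs) has already been made. `fetch_weather` and `fetch_summary` are hypothetical stubs returning canned strings, not real weather or Wikipedia clients, and the concurrency comes from Python's standard `concurrent.futures` module rather than from LangChain.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

def fetch_weather(city: str) -> str:
    # Hypothetical stub; a real tool would call a weather API.
    return f"Weather in {city}: 18 degrees C, cloudy"

def fetch_summary(topic: str) -> str:
    # Hypothetical stub; a real tool would query Wikipedia.
    return f"{topic} is the capital of France."

def answer_query(plan: Dict[str, Callable[[], str]]) -> str:
    # Run every planned tool call concurrently, then combine the results
    # in the plan's order so the final answer is deterministic.
    with ThreadPoolExecutor(max_workers=max(1, len(plan))) as pool:
        futures = {name: pool.submit(call) for name, call in plan.items()}
        results = {name: future.result() for name, future in futures.items()}
    return "\n".join(f"[{name}] {results[name]}" for name in plan)

plan = {
    "weather": lambda: fetch_weather("Paris"),
    "wikipedia": lambda: fetch_summary("Paris"),
}
print(answer_query(plan))
```

In a real agent, the combined observations would typically go back to the LLM so it can write the final answer; here they are simply concatenated to keep the sketch self-contained.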

Conclusion

Both LLM Chains and LLM Agent Executors offer powerful ways to structure and execute tasks using LangChain, but they are designed for different use cases. Understanding the key differences is essential for choosing the right approach.

- Choose LLM Chains for tasks with a clear, linear progression. These are easy to implement, have less computational complexity, and work well when the operations are predictable and straightforward.

- Opt for LLM Agent Executors for more sophisticated scenarios where the agent must reason, adapt, and make decisions dynamically. They are ideal for tasks involving multiple tools, unexpected inputs, or real-time reasoning.

By selecting the appropriate architecture, whether that is the simplicity of LLM Chains or the adaptability of LLM Agent Executors, you can build more efficient, intelligent, and effective LangChain applications tailored to the specific needs of your project.

If you need further information or assistance, feel free to contact ScaleX Innovation at info@scalexi.ai.
